Local Llama

Deploying, Testing and Benchmarking Llama Models in Google Colab

For a few weeks now, I have been following the r/localllama community, whose goal is to run uncensored large language models locally and privately for their own use cases. In this post, I will walk through how I test and deploy LLMs locally and share some of the models I have been using.

Why Run LLMs Locally?

But first, let’s talk a bit about why you should consider deploying an LLM locally.

Personal Use Cases

Cost-Effectiveness

One of the most compelling reasons to use private and locally deployed LLMs is their cost-effectiveness. Commercial LLM APIs can be prohibitively expensive, especially for individual users or small-scale projects. By deploying an LLM locally, you can significantly reduce or even eliminate these costs, making AI more accessible to a broader range of users.

Freedom from Limitations

Commercial LLM APIs often come with constraints such as censorship and limits on the number of requests. These restrictions can keep you from using the model's full capabilities. With a locally deployed LLM, you have complete control over the model and are not subject to these limitations.

Efficient Resource Utilization

If you have a GPU, you can quickly load an LLM, ask a few questions, perform some tasks, and then shut it down. This approach allows for efficient use of resources, as you don't need to maintain a dedicated resource running the LLM continuously. It's a flexible and efficient way to leverage the power of LLMs without incurring unnecessary costs. Even if you don’t have your own GPU, you can use the free tier of Colab to deploy an LLM and ask it questions in less than 5 minutes. We will see how you can do this in a later section.

Enhanced Privacy and Security

When you use a locally deployed LLM, your data stays on your machine. This setup provides an additional layer of privacy and security, as you don't have to send your data over the internet to a third-party API. It's a crucial advantage for users who deal with sensitive or confidential information.

For Commercial Use Cases

Competitive Performance of Smaller Models

Many smaller LLMs now perform on par with, or even better than, much larger models on a range of tasks. This means companies can run these smaller, more efficient models locally with little loss in performance, which makes it much cheaper for businesses to incorporate AI into their operations than relying on the larger models.

Cost Reduction

The costs associated with LLM API usage can quickly add up for businesses, especially with high-volume or continuous use. By deploying an LLM locally, companies can offload some simpler tasks to the local model, reducing the reliance on costly API calls and thereby significantly cutting operational costs.

Dependability and Performance

LLM APIs do not always come with service level agreements (SLAs), and their performance can vary: they may become slow, their output quality may drop, or they may be deprecated altogether. We have talked before about how these issues make it challenging to take LLMs to production reliably. By using a private, locally deployed LLM, companies can ensure consistent performance and avoid disruptions to their operations.

Flexible Service Offerings

Private LLMs can be used to offer an entry-level or free product, with the option to upgrade to a higher level of service using LLM APIs. This strategy allows companies to provide a tiered service offering, attracting a wider range of customers and providing a pathway for users to upgrade as their needs grow.

Customization and Integration

Private, locally deployed LLMs offer more room for customization and integration with existing systems. Companies can fine-tune the models to better suit their needs and integrate them seamlessly into their existing infrastructure, which can improve performance, efficiency, and user experience.

How I Run LLMs Locally

The easiest way to run an LLM locally is the text-generation-webui project by oobabooga. It makes it easy to download any model from Hugging Face, load it with many popular LLM backends, and swap between models to compare their performance.

Setting it up is easy. First, clone the repository and install the requirements:

git clone https://github.com/oobabooga/text-generation-webui

pip install -r text-generation-webui/requirements.txt -q

pip install bitsandbytes==0.38.1

Next, download the model you want to try out using the project's download script. Here I'm downloading the 13B Llama 2 GPTQ variant by Nous Research:

cd text-generation-webui && python download-model.py NousResearch/Nous-Hermes-Llama2-13b-GPTQ

Finally, start the server and visit the Gradio URL:

cd text-generation-webui && python server.py --share

You should see a UI like the one below. First, select and load the model with the correct loader and settings. For this model, make sure you use the ExLlama_HF model loader.

Let’s try a query and see the output.

One thing I love about oobabooga's project is that it reports stats for each run. The screenshot above shows that I was getting 30 tokens/second on my hardware.
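If you want to reproduce a tokens/second number outside the UI, for example to compare backends on equal footing, the measurement is just new tokens divided by wall-clock time. The sketch below is a minimal, backend-agnostic harness. It assumes each backend is wrapped in a hypothetical `generate(prompt)` function that returns the list of newly generated token ids; none of the libraries expose exactly this signature, so you would write a small wrapper per backend.

```python
import time
from typing import Callable, Dict, List


def tokens_per_second(generate: Callable[[str], List[int]], prompt: str, runs: int = 3) -> float:
    """Average throughput of a generate function that returns the new token ids.

    `generate` is a hypothetical wrapper around whichever backend is under test.
    """
    generate(prompt)  # untimed warm-up call: the first call often includes one-off setup
    total_tokens = 0
    total_time = 0.0
    for _ in range(runs):
        start = time.perf_counter()
        new_tokens = generate(prompt)
        total_time += time.perf_counter() - start
        total_tokens += len(new_tokens)
    return total_tokens / total_time


def compare(backends: Dict[str, Callable[[str], List[int]]], prompt: str) -> Dict[str, float]:
    """Run the same prompt through every backend and report tokens/sec for each."""
    return {name: tokens_per_second(fn, prompt) for name, fn in backends.items()}


# Stand-in backend for demonstration: "generates" 50 tokens in about 0.1 s.
def fake_backend(prompt: str) -> List[int]:
    time.sleep(0.1)
    return list(range(50))


print(compare({"fake": fake_backend}, "Tell me about llamas."))
```

Using the same prompt and the same number of new tokens for every backend matters more than the exact harness, since throughput depends on prompt length and output length.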

Some Things to Note When Running Llama Models Locally

LLMs take a lot of memory to run. A good rule of thumb for the model weights is that 1B parameters require about 2GB of memory at 16-bit floating point precision, about 1GB at INT8 precision, or about 500MB at INT4 precision. The latter two are quantized models. Activations and the KV cache need additional memory on top of the weights.
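The rule of thumb is simply parameter count times bytes per parameter. Here is a minimal sketch (the function name is illustrative) that counts the weights only, so treat its output as a lower bound on what the GPU actually needs:

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes).

    Ignores activations and the KV cache, so real usage is higher.
    """
    bytes_per_param = bits_per_param / 8
    # (params_billion * 1e9 params) * bytes_per_param / 1e9 bytes per GB
    return params_billion * bytes_per_param


for bits, label in [(16, "FP16"), (8, "INT8"), (4, "INT4")]:
    print(f"7B in {label}: ~{weight_memory_gb(7, bits):.1f} GB")
```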

This means that in Colab, you can load 16-bit 7B models or quantized 13B models if you are allocated a V100 GPU with 16GB of memory. If you are allocated an A100 with 40GB of memory, you can load 16-bit 13B models. To load a 16-bit model larger than 13B, you would need a GPU with more VRAM (rare) or multiple GPUs (generally, the next biggest models have 30B parameters).
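The GPU sizing above can be checked with the same weights-only estimate. This sketch assumes 16GB for the V100 and 40GB for the A100, and only compares weight memory against VRAM. A configuration that "fits" right at the limit (like a 16-bit 7B model on 16GB) may still run out of memory once activations and the KV cache are added, so leave headroom.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Weights-only memory estimate in GB (ignores activations and KV cache)."""
    return params_billion * bits_per_param / 8


def fits(params_billion: float, bits_per_param: int, vram_gb: float) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weight_memory_gb(params_billion, bits_per_param) <= vram_gb


# Assumed VRAM sizes for the Colab GPUs mentioned in the text.
gpus = {"V100 (16 GB)": 16, "A100 (40 GB)": 40}
configs = [(7, 16), (13, 4), (13, 16), (30, 16)]  # (billions of params, bits per param)

for gpu, vram in gpus.items():
    for params, bits in configs:
        status = "fits" if fits(params, bits, vram) else "does not fit"
        print(f"{gpu}: {params}B @ {bits}-bit -> {status}")
```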

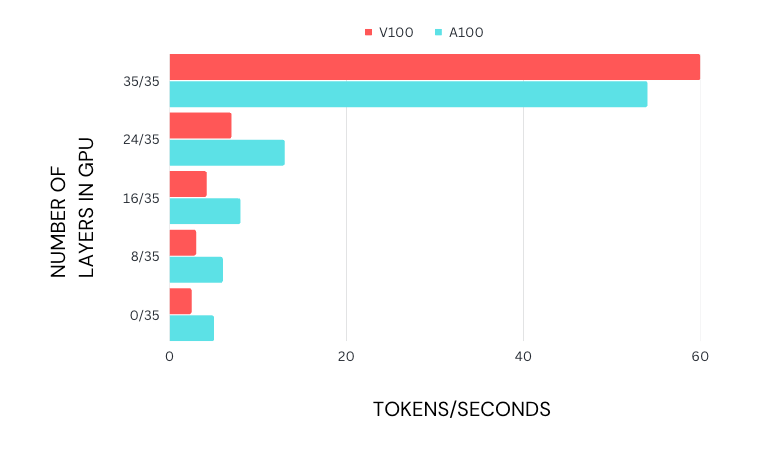

You can also load larger models by offloading part of them to your CPU and system RAM, or by running them entirely on your CPU. However, this will significantly reduce your inference speed. For instance, even the best CPU with DDR4 RAM will not get you more than 5 tokens/sec for an INT8-quantized 7B model (good discussion about this here). The bottleneck is memory bandwidth: generating each token requires reading the model weights from memory, and system RAM delivers them far more slowly than GPU memory does.
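The bandwidth argument gives a quick back-of-the-envelope ceiling: if every weight is read from memory once per generated token, tokens/sec cannot exceed memory bandwidth divided by model size. The sketch below assumes dual-channel DDR4-3200, whose theoretical peak is about 51.2 GB/s; real systems reach only part of that peak, so actual throughput lands below this bound.

```python
def ddr_bandwidth_gbps(mega_transfers_per_sec: int, channels: int) -> float:
    """Theoretical peak bandwidth in GB/s: transfers/sec * 8 bytes per 64-bit channel * channels."""
    return mega_transfers_per_sec * 8 * channels / 1000


def max_tokens_per_sec(bandwidth_gbps: float, model_size_gb: float) -> float:
    """Upper bound if all weights are streamed from memory once per generated token."""
    return bandwidth_gbps / model_size_gb


bw = ddr_bandwidth_gbps(3200, channels=2)  # assumed: dual-channel DDR4-3200
model_gb = 7 * 8 / 8                        # 7B parameters at INT8 -> ~7 GB of weights
print(f"bandwidth ~{bw:.1f} GB/s, ceiling ~{max_tokens_per_sec(bw, model_gb):.1f} tokens/sec")
```

Even this optimistic ceiling is only around 7 tokens/sec, which is in the same ballpark as the practical figure quoted above.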

Another thing to pay attention to is your model loader, especially with quantized models. Many loaders are available; for quantized models, the two most popular formats are GPTQ (loaded with AutoGPTQ or ExLlama) and GGML (loaded with llama.cpp), and you will usually see the format mentioned in the model name. If you use the wrong loader, either the model will fail to load or you will get gibberish output. The popular formats for non-quantized models are PyTorch (.bin) and Hugging Face safetensors. The nice thing about oobabooga's project is that you can quickly swap between these backends. You can also use a Triton backend for fast inference.
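Because the format is usually in the model name, picking a loader can be reduced to a simple lookup. The helper below is hypothetical: text-generation-webui does not do this for you, the mapping is an assumption based on common naming conventions, and the returned strings mirror the webui's loader names at the time of writing, which may differ in your version. The two `some-org/...` names are made up for illustration.

```python
def guess_loader(model_name: str) -> str:
    """Hypothetical helper: guess a text-generation-webui loader from a model's name."""
    name = model_name.lower()
    if "ggml" in name:
        return "llama.cpp"     # GGML files are run by llama.cpp
    if "gptq" in name:
        return "ExLlama_HF"    # used for the Llama 2 GPTQ model above; AutoGPTQ also loads GPTQ
    return "Transformers"      # unquantized PyTorch (.bin) / safetensors weights


for repo in ["NousResearch/Nous-Hermes-Llama2-13b-GPTQ",
             "some-org/llama-2-7b-ggml",
             "some-org/llama-2-7b"]:
    print(repo, "->", guess_loader(repo))
```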

Finally, pay attention to which model you use.

The most popular models right now are probably Llama 2 and its variants. Meta did not release any quantized models, so a few people quantized them and shared them with the community. Since many of these quantized models are based on the base (non-chat) model, they do not have the censorship that Meta's chat model has. Others fine-tuned the model on their own datasets and released the results; depending on your use case, these fine-tuned models may perform better. The community has also been hard at work releasing models with extra features such as longer context lengths. These are great for commercial use cases or for chatting, where you may want to provide lengthy context to the model.

Leaderboards can help you gauge the performance you can expect from a model.

Testing Local Llama Performance

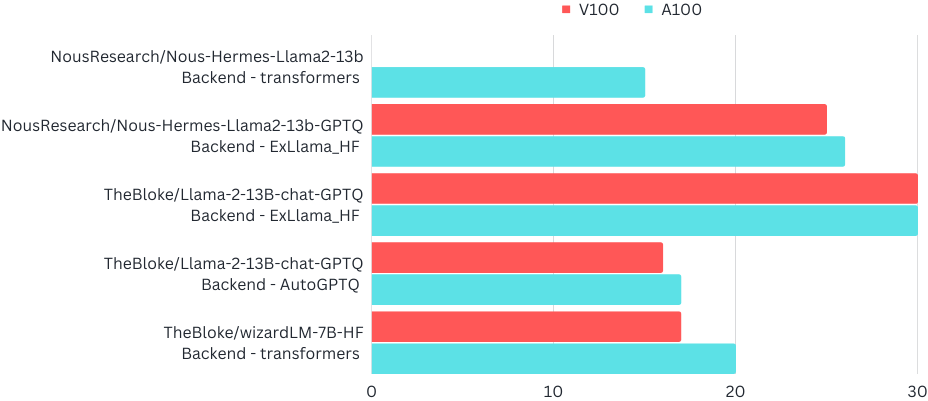

I chose a few popular models in two sizes, 7B and 13B, and four backends: Transformers, ExLlama, AutoGPTQ, and GGML. Below are the results of running the models on the different backends.

The nice thing about GGML is that you can not only quantize the model but also split its layers between the CPU and the GPU. This lets you fit a large model partly onto a small GPU. For both GPUs, throughput (tokens generated per second) is highest when all layers are processed on the GPU.
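To decide how many layers to put on the GPU, you can divide the weight memory evenly across the model's layers and see how many fit in your VRAM budget. The result is roughly what you would set for llama.cpp's n-gpu-layers option. This is a rough sketch under stated assumptions: it treats all layers as equal in size and ignores embeddings, the KV cache, and CUDA overhead, so pass a budget smaller than your card's total VRAM. Llama 2 13B has 40 transformer layers.

```python
import math


def gpu_layers_that_fit(params_billion: float, bits_per_param: int,
                        n_layers: int, vram_budget_gb: float) -> int:
    """Estimate how many transformer layers can be offloaded to the GPU.

    Assumes weights are spread evenly across layers; ignores embeddings,
    the KV cache, and CUDA overhead.
    """
    weights_gb = params_billion * bits_per_param / 8
    per_layer_gb = weights_gb / n_layers
    return min(n_layers, math.floor(vram_budget_gb / per_layer_gb))


# Llama 2 13B at 4-bit: ~6.5 GB of weights over 40 layers (~0.16 GB per layer).
print(gpu_layers_that_fit(13, 4, n_layers=40, vram_budget_gb=4.0))   # → 24 (partial offload)
print(gpu_layers_that_fit(13, 4, n_layers=40, vram_budget_gb=16.0))  # → 40 (all layers fit)
```

The remaining layers run on the CPU, which is why throughput drops as fewer layers fit on the GPU.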