Newsletter: Industry-Specific LLMs and MLOps for Research Labs

The promise of customized AI solutions, an MLOps architecture for research labs, and the latest trends in the LLM and MLOps landscape

Industry-Specific LLMs: A New Era of Customized AI Solutions

As the field of AI matures, we are witnessing an intriguing trend: the rise of industry-specific large language models (LLMs). Will we see LLMs built exclusively for particular industries, offering tailored solutions to their unique challenges? The possibility is not far-fetched, and it is an active area of work for both AI researchers and industry practitioners.

Imagine the benefits of bespoke LLMs, fine-tuned to each sector's language, jargon, and context. A healthcare-focused LLM could help expedite drug discovery, while a finance-oriented model could quickly unpack complex investment strategies. An industry-specific LLM trained on curated, cleaned data may also be less susceptible to bias and trust issues than a general-purpose LLM. A healthcare model, for example, should never be trained on data containing medical misinformation, and a narrower, curated corpus makes that easier to enforce. The possibilities are broad, but so are the challenges.

With industry-specific LLMs, businesses can use models that understand their domain's jargon, dynamics, and nuances, producing more accurate and relevant insights. BloombergGPT, a 50B-parameter LLM trained largely on financial data, has outperformed considerably larger general-purpose LLMs on financial tasks. Developing such models, however, requires access to large, specialized datasets and more focused research effort.

As the race to build industry-tailored LLMs unfolds, stakeholders must weigh the ethical implications, privacy concerns, and risk of monopolies that may arise. Will this democratize AI, or will it further widen the gap between tech giants and smaller players? The answers may shape the future of AI and its impact across industries.

MLOps for Research Labs: The Key to Streamlining Processes and Ensuring Reproducibility

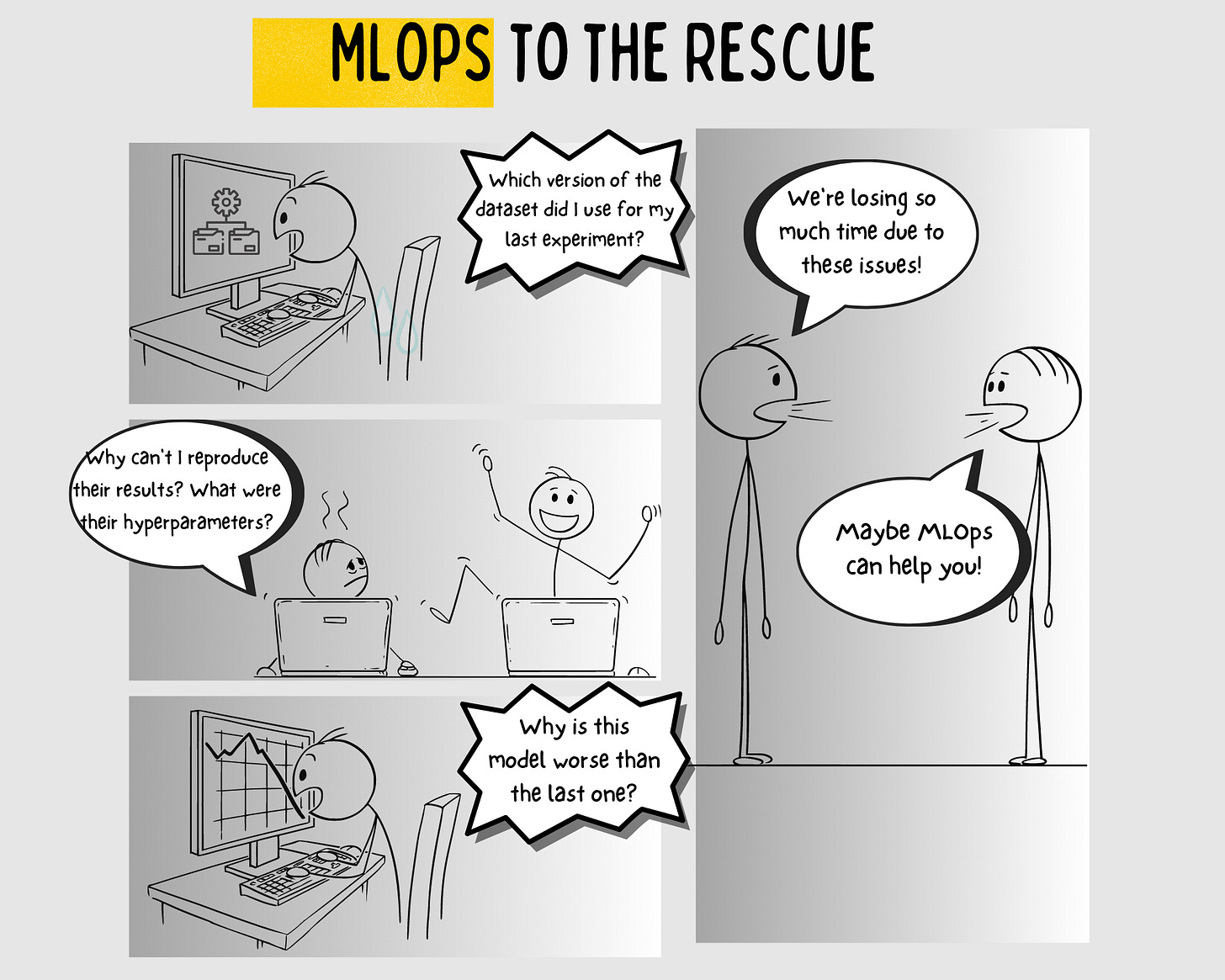

MLOps has become indispensable for large-scale AI deployments, but research labs often struggle to adopt its practices because of tight budgets, limited resources, and different priorities. Even so, labs can still benefit from MLOps through streamlined processes, reproducible experiments, and easier collaboration.

In our latest blog post, we propose a simple, cost-effective MLOps architecture that research labs can adopt to streamline their workflows. It shows how MLOps can help labs version models and data, keep experiments reproducible, and support collaboration among researchers.

Read the full blog post on MLOps for Research Labs here.
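To make the versioning and reproducibility ideas concrete, here is a minimal sketch assuming a lab keeps its datasets as plain files on disk. It is an illustration, not the architecture described in the blog post: a content hash of the data directory serves as the dataset version, and a JSON manifest records that version together with the random seed and hyperparameters of each run. The function names (`dataset_version`, `write_run_manifest`) and the manifest layout are hypothetical; dedicated tools such as DVC and MLflow offer fuller versions of the same idea.

```python
import hashlib
import json
import random
import tempfile
from pathlib import Path
from typing import Any, Dict


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's bytes, read in chunks so large files never load fully."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def dataset_version(data_dir: Path) -> str:
    """Short version ID for a data directory (hypothetical helper).

    Hashes every file's relative path and content hash in sorted order, so the ID
    changes if any file is added, removed, renamed, or edited.
    """
    digest = hashlib.sha256()
    for path in sorted(p for p in data_dir.rglob("*") if p.is_file()):
        rel = path.relative_to(data_dir).as_posix()
        digest.update(f"{rel}\0{file_sha256(path)}\n".encode())
    return digest.hexdigest()[:12]


def write_run_manifest(run_dir: Path, data_dir: Path, seed: int,
                       params: Dict[str, Any]) -> Path:
    """Seed the RNG and save what is needed to rerun the experiment as JSON."""
    # Only Python's built-in RNG is seeded here; seed NumPy, PyTorch, etc. the same way.
    random.seed(seed)
    manifest = {
        "data_version": dataset_version(data_dir),
        "seed": seed,
        "params": params,
    }
    run_dir.mkdir(parents=True, exist_ok=True)
    out = run_dir / "manifest.json"
    out.write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return out


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "data"
        data.mkdir()
        (data / "train.csv").write_text("x,y\n1,2\n")
        manifest = write_run_manifest(Path(tmp) / "runs" / "exp1", data,
                                      seed=42, params={"lr": 1e-3})
        print(manifest.read_text())
```

Because the version ID changes whenever any file's name or bytes change, two runs whose manifests match were, for practical purposes, trained on the same data with the same settings. Comparing manifests is a cheap first check when a result cannot be reproduced.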

MLOps & ML Pulse Check: A Weekly Synthesis

This week in LLMs, a leaked internal Google document argues that open-source language models are improving fast enough to outpace tech giants such as Google and OpenAI. Lower barriers to entry have sparked a surge of innovation, pushing established players to keep up. Never underestimate the power of open source!

MosaicML Unveils MPT-7B: A New Milestone in Large Language Models. MosaicML has released MPT-7B, a decoder-style transformer pretrained on 1T tokens. It features an architecture optimized for faster training and inference, and uses ALiBi (Attention with Linear Biases) to handle long inputs. More details are available on the MPT-7B model card.
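ALiBi drops learned positional embeddings and instead adds a fixed penalty to each attention score that grows linearly with the distance between query and key, using a different slope per head; this is intended to let a model extrapolate to inputs longer than those seen in training. Below is a minimal, dependency-free sketch of the bias tensor for causal attention, assuming the number of heads is a power of two (the case covered by the ALiBi paper's simple slope formula). It illustrates the technique and is not MosaicML's implementation; the function names are hypothetical.

```python
import math
from typing import List


def alibi_slopes(n_heads: int) -> List[float]:
    """Per-head slopes: a geometric sequence starting at 2**(-8 / n_heads).

    Assumes n_heads is a power of two (e.g. 8 heads -> 1/2, 1/4, ..., 1/256).
    """
    if n_heads <= 0 or (n_heads & (n_heads - 1)) != 0:
        raise ValueError("this sketch assumes n_heads is a power of two")
    start = 2 ** (-8 / n_heads)
    return [start ** (h + 1) for h in range(n_heads)]


def alibi_bias(n_heads: int, seq_len: int) -> List[List[List[float]]]:
    """Return bias[h][i][j], to be added to head h's attention logits.

    For query position i and key position j <= i the bias is m_h * (j - i):
    zero for the current token and increasingly negative for distant ones.
    Future positions (j > i) get -inf, which doubles as the causal mask.
    """
    bias = []
    for m in alibi_slopes(n_heads):
        head = [
            [m * (j - i) if j <= i else -math.inf for j in range(seq_len)]
            for i in range(seq_len)
        ]
        bias.append(head)
    return bias


if __name__ == "__main__":
    slopes = alibi_slopes(8)
    print(slopes[0], slopes[-1])  # 0.5 0.00390625
    b = alibi_bias(n_heads=8, seq_len=4)
    print(b[0][3])  # [-1.5, -1.0, -0.5, 0.0]
```

In a full model these values are added to each head's query-key scores before the softmax. Because the biases are fixed rather than learned, they add no trainable parameters.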