Real-Time AI with Groq's LPU

How is Groq able to achieve throughputs of more than 400 Tokens/Sec and will their technology be available to the open-source community?

About a month ago, Groq created a lot of buzz in the world of AI when its APIs clocked in at as much as 400 tokens per second for some models. These startling speeds, achieved by Groq's Language Processing Unit (LPU), herald a new era for real-time AI applications. But the burning question on everyone's mind is: what is the secret behind this speed, and what does it mean for consumers, API providers, and companies invested in developing Large Language Models (LLMs)?

How Groq Achieves Its Breakneck Speeds

Groq’s LPU Inference Engine takes a new approach to running LLMs: it is designed specifically for computationally intensive, sequential workloads.

There are two main challenges in speeding up LLM inference. The first is memory. Despite the size of the model, each individual compute step is fairly simple and can be done quickly. However, loading the weights from memory takes time, and this limits how fast the model can run. Keeping the weights in VRAM helps, but higher memory bandwidth would make inference much faster still. The second challenge is that LLMs are sequential in nature: each token depends on the tokens generated before it, which makes execution hard to parallelize.

This means that LLM inference is essentially a dataflow problem: what we need to do is increase the speed at which data reaches the processing units. Since every step in an LLM can be determined at the start, we can plan to have the data it needs available at inference time. So Groq came up with a system in which numerous chips with small but very fast memory operate in unison.

With this architecture, the LPU doesn't just edge out traditional GPUs and CPUs in compute density for LLMs; it also sidesteps the notorious external memory bottleneck. For deeper technical insight, Groq's architecture is discussed in detail in their two ISCA papers from 2020 and 2022.
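The memory-bandwidth argument can be made concrete with a rough upper bound: if every weight has to be read once per generated token, single-stream decode speed cannot exceed bandwidth divided by model size. The sketch below is only an illustration. The 16 GB model size and the 2 TB/s "GPU-class" bandwidth are assumed round numbers, the 80 TB/s value is the on-chip SRAM figure Groq quotes, and the function name is hypothetical. It ignores compute time, KV-cache traffic, batching, and chip-to-chip communication.

```python
GB = 1e9   # decimal gigabyte, an assumption for this estimate
TB = 1e12  # decimal terabyte

def max_tokens_per_second(model_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Idealized ceiling on decode speed when all weights are read once per token."""
    return bandwidth_bytes_per_s / model_bytes

model_bytes = 16 * GB            # assumed model size (illustrative)
gpu_like_bandwidth = 2 * TB      # assumed external-memory bandwidth (illustrative)
sram_bandwidth = 80 * TB         # on-chip SRAM bandwidth figure quoted by Groq

gpu_bound = max_tokens_per_second(model_bytes, gpu_like_bandwidth)
sram_bound = max_tokens_per_second(model_bytes, sram_bandwidth)

print(f"External-memory bound: {gpu_bound:.0f} tokens/s")
print(f"On-chip SRAM bound:    {sram_bound:.0f} tokens/s")
```

Under these assumptions the ceiling scales linearly with bandwidth, which is why moving the weights into faster memory matters more than adding raw compute. Real deployments will land well below either bound.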

Groq’s Performance

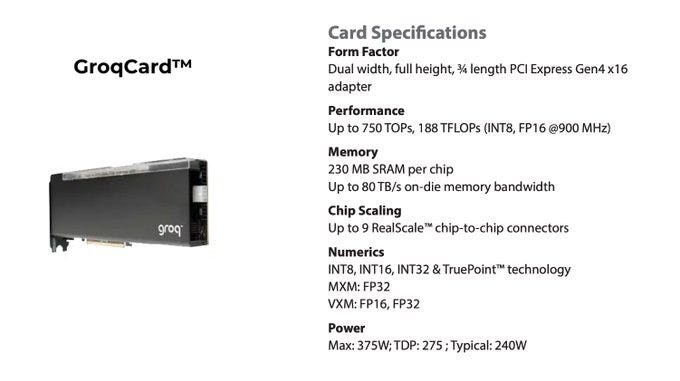

With this architecture, Groq has managed to beat other API providers by a wide margin. The numbers are staggering: up to 750 TOPS, 188 TFLOPS, and a memory bandwidth of 80 TB/s. This is not a marginal improvement but a major leap in efficiency and speed, reshaping the landscape of what's possible in AI inference. Below are some plots comparing the latency, throughput and price of Groq vs other API providers.

Groq is in a different league altogether!

Devil in the Details: The Card's Technical Specifications

The GroqCard is available for purchase. From the listing, we can get an idea of what we get for its steep $20k price.

We get a dual-width, full-height, ¾-length PCI Express Gen4 x16 adapter with a TDP of 275W. Not bad. However, the devil is in the details: the card comes with only 230MB of on-board memory.

To run Mistral 7B in FP16, you need around 16GB of memory. Just to hold that model on these cards, you need around 70 of them. That equates to about $1.4M! To make matters worse, at 275W per card that'd be about 19 kW of power draw. Llama 70B is 130GB, so you would need about 565 of these cards, or roughly $11 million!
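The back-of-the-envelope arithmetic here can be reproduced with a few lines of Python. This is a sketch under stated assumptions: decimal units (1 GB = 1000 MB), the model sizes and the $20k price as quoted in this article, and weights that must fit entirely in on-card memory with nothing reserved for activations. The function names are hypothetical, and exact division may differ slightly from the rounded figures in the text.

```python
import math

CARD_MEMORY_BYTES = 230e6  # 230 MB on-board memory per card (from the listing)
CARD_PRICE_USD = 20_000    # approximate price quoted in this article
CARD_TDP_WATTS = 275       # TDP per card

def cards_needed(model_bytes: float) -> int:
    """Minimum number of cards whose combined memory can hold the model."""
    return math.ceil(model_bytes / CARD_MEMORY_BYTES)

def cluster_estimate(model_bytes: float) -> dict:
    """Card count, purchase cost, and peak power for a model of the given size."""
    n = cards_needed(model_bytes)
    return {
        "cards": n,
        "cost_usd": n * CARD_PRICE_USD,
        "power_kw": n * CARD_TDP_WATTS / 1000,  # kilowatts, a rate (not kWh)
    }

mistral_7b = cluster_estimate(16e9)   # ~16 GB, as stated in the article
llama_70b = cluster_estimate(130e9)   # ~130 GB, as stated in the article

for name, est in [("Mistral 7B", mistral_7b), ("Llama 70B", llama_70b)]:
    print(f"{name}: {est['cards']} cards, ${est['cost_usd']:,}, {est['power_kw']:.2f} kW")
```

Note that the power figure is in kilowatts: energy in kWh only follows once a running time is chosen.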

These numbers are in line with a statement from a Groq engineer, who said that their Llama2-70B demo runs on a cluster of 576 chips. They are not running the model at full precision, though. Instead, they are “running a mixed FP16 x FP8 implementation where the weights are converted to FP8 while keeping the majority of the activations at FP16”.
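A quick calculation suggests why the FP8 weights matter on a cluster of that size. The sketch below assumes 70 billion parameters, weights spread evenly across 576 chips, and 230 MB of memory per chip (taking the card's on-board memory as the per-chip figure). These are simplifications for illustration, not a description of how Groq actually partitions the model.

```python
PARAMS = 70e9            # parameter count implied by the model name (assumption)
CHIPS = 576              # cluster size mentioned by the Groq engineer
CHIP_SRAM_BYTES = 230e6  # assumed per-chip memory, taken from the card listing

def weight_bytes_per_chip(params: float, bytes_per_param: int, chips: int) -> float:
    """Average weight storage per chip if weights are split evenly."""
    return params * bytes_per_param / chips

fp16_per_chip = weight_bytes_per_chip(PARAMS, 2, CHIPS)  # 2 bytes per FP16 weight
fp8_per_chip = weight_bytes_per_chip(PARAMS, 1, CHIPS)   # 1 byte per FP8 weight

for label, size in [("FP16", fp16_per_chip), ("FP8", fp8_per_chip)]:
    fits = "fits" if size <= CHIP_SRAM_BYTES else "does not fit"
    print(f"{label}: {size / 1e6:.1f} MB per chip ({fits} in 230 MB)")
```

Under these assumptions, FP16 weights alone would exceed each chip's memory, while FP8 weights take roughly half of it and leave room for activations and other working data.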

The modest on-board memory combined with the prohibitive cost makes the GroqCard an exclusive offering not meant for the shallow-pocketed. So who is it meant for?

Tailor-Made for the Titans

Groq's mission is to redefine the standards for GenAI inference speed. Their focus is on inference speed, not on training. With each card's 230 MB of SRAM operating at 80 TB/s, it's a device designed for hyperscaling, essential for high-throughput, low-latency inference across hundreds of cards. This gives us some insight into the target audience for the GroqCard.

It's not a device you'll find in a startup garage or a hobbyist's desktop. It's engineered for entities capable of deploying entire data center racks, those who need to scale their high-throughput inferencing workloads horizontally.

If you aren't a person or organization that would already consider buying thousands of A100s, then this is not a product aimed at you. Instead of buying thousands of A100s and running several models at once, each at a low tokens-per-second rate, you buy a few hundred GroqCards and run just one model very fast. Each card handles only a tiny fraction of the overall model, but it processes its share so quickly that you end up with higher throughput than an A100 array of much higher cost.

The card's limited memory is a trade-off, allowing for its speed to shine when scaling workloads across a vast array of cards. The card is specifically aimed at a few players who are running LLM APIs at scale.