The Cost of Inference: Running the Models

Calculating the carbon footprint and environmental impact of ChatGPT

In our previous blog post, we discussed the various factors that influence the carbon footprint of LLMs. We also calculated the carbon footprint of training several commonly used LLMs and foundation models. In this blog post, we delve further into the environmental impact and carbon footprint of model inference.

The Cost of Inference

Training LLMs like GPT-4 is an intensive process, demanding substantial computational resources. Typically, these models are trained on clusters of GPUs or specialized hardware over months. The power consumption during this phase is significant due to the complexity of tasks like processing vast datasets and continuously adjusting the model's parameters.

Inference, on the other hand, is generally less power-intensive than training. Once a model is trained, running it does not require the same level of continuous, heavy computation. Depending on the model's size and the task at hand, inference can even run on less powerful machines, including CPUs, although LLMs are usually still served on GPUs.

The training phase is responsible for the bulk of the carbon footprint associated with LLMs. The extensive use of thousands of high-powered GPUs and the duration of training contribute significantly to greenhouse gas emissions.

The carbon footprint of the inference phase is markedly lower than that of training. Because inference requires fewer computational resources, its emissions are correspondingly lower. However, the frequency of inference operations matters: in applications where LLMs are queried continuously, the cumulative carbon footprint can become substantial.

The real-world environmental cost of using LLMs hinges on the scale and frequency of their application. Services that continuously rely on these models for real-time responses, like chatbots or content generation tools, can accumulate significant energy usage over time.

Inference at Meta

Meta has been notably transparent about the environmental impact of its AI operations. In a paper, the company disclosed that power within its AI infrastructure is allocated in a 10:20:70 ratio across three key phases: Experimentation, Training, and Inference, with Inference consuming the lion's share.

This distribution reflects a crucial aspect of AI usage: Experimentation and Training are intensive but finite phases, whereas Inference is a long-running process. As a result, the carbon emissions from Inference accumulate over time and can eventually surpass the total emissions from the model's initial training.

The diagram from the paper showcases the operational carbon footprint of various large-scale machine learning tasks. The black bars represent the carbon footprint during the offline training phase. This phase has a substantial carbon impact, indicating the significant energy required to process and learn from massive datasets.

The orange bars, although fewer, indicate that the models undergoing online training also contribute notably to carbon emissions. Online training allows models to update and refine their learning continuously, which, while beneficial for performance, adds to the carbon footprint.

The patterned bars illustrate the carbon footprint during the inference phase. For many models, this footprint is smaller per unit of time than that of the training phases. However, because inference is ongoing, these emissions accumulate and in many cases eclipse the one-time training emissions, especially for heavily used models.

Real-World Implications: LLMs in Daily Use

As we have established, the environmental cost of LLMs depends on the scale of their application. For instance, energy consumption becomes a crucial factor as models like ChatGPT are increasingly used as search engines. A single ChatGPT query might consume around 0.3 kWh, compared with just 0.0003 kWh for a standard Google search. By this estimate, a ChatGPT query uses roughly 1,000 times as much energy as a simple Google search, highlighting the significant environmental impact of frequent LLM usage.

How was the energy usage calculated?

Unfortunately, little is publicly known about OpenAI's inference infrastructure. Sam Altman offered some insight in a tweet suggesting that a single prompt costs "probably single-digit cents", which puts the worst case at $0.09 per request.

This Stack Exchange answer delves into how this figure is arrived at and what it means in more tangible terms.

The cost of processing an AI request is not just a matter of computational power; it also involves significant energy consumption. Altman estimates that at least half of the cost of a single AI request can be attributed to energy usage. Assuming an energy price of $0.15 per kilowatt-hour (kWh), we can break the expenses down further:

Cost per Request: $0.09

Proportion of Energy Cost: 50% of the total cost

Energy Price: $0.15 per 1 kWh

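Using these figures, the energy consumption per AI request is the energy portion of the cost divided by the price of energy:

$$\text{Energy Consumption} = \frac{\text{Cost per Request} \times \text{Proportion of Energy Cost}}{\text{Energy Price}}$$

Plugging in the numbers, (0.09 × 0.5) / 0.15 gives an energy consumption of 0.3 kWh per request.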

This translates to 300 watt-hours (Wh) per request.

To put this into a more relatable context, consider the energy required to charge a smartphone. An average smartphone charge takes about 5 Wh, so the energy used for a single ChatGPT request (300 Wh) is equivalent to charging a smartphone 60 times!
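The cost-based estimate above can be reproduced in a few lines. This is a minimal sketch that uses only the figures quoted in this post ($0.09 per request, a 50% energy share, $0.15 per kWh, 5 Wh per smartphone charge and 0.0003 kWh per Google search); the function and constant names are illustrative and not taken from any existing tool.

```python
def energy_per_request_kwh(cost_usd: float, energy_share: float, price_usd_per_kwh: float) -> float:
    """Energy implied by the share of a request's cost that is spent on electricity."""
    return cost_usd * energy_share / price_usd_per_kwh

# Figures quoted in the post: worst-case cost, assumed energy share and energy price
COST_PER_REQUEST_USD = 0.09
ENERGY_SHARE = 0.5
PRICE_USD_PER_KWH = 0.15
SMARTPHONE_CHARGE_WH = 5.0    # rough energy for one full smartphone charge
GOOGLE_SEARCH_KWH = 0.0003    # per-search figure used for comparison

kwh = energy_per_request_kwh(COST_PER_REQUEST_USD, ENERGY_SHARE, PRICE_USD_PER_KWH)
wh = kwh * 1000
print(f"Energy per request: {kwh:.2f} kWh ({wh:.0f} Wh)")
print(f"Equivalent smartphone charges: {wh / SMARTPHONE_CHARGE_WH:.0f}")
print(f"Ratio to a Google search: {kwh / GOOGLE_SEARCH_KWH:.0f}x")
```

Running it prints 0.30 kWh (300 Wh) per request, 60 smartphone charges and a ratio of about 1000x, matching the figures above.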

Calculating the Carbon Footprint of GPT-4

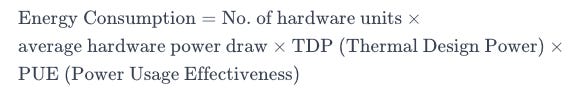

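The energy consumption of LLMs in a given time frame (say, one hour) can be calculated using the formula:

$$\text{Energy (kWh)} = \text{Number of Hardware Units} \times \text{TDP per Unit (kW)} \times \text{PUE} \times \text{Time (h)}$$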

From leaks and tweets, we can estimate the infrastructure running ChatGPT:

Number of Hardware Units: 28,936 Nvidia A100 GPUs (equivalent to 3,617 eight-GPU servers)

TDP: 6.5 kW/server

PUE: 1.2 (Power Usage Effectiveness, a measure of how efficiently a data center uses energy), as reported by Azure.

This formula gives us the total energy consumption for the ChatGPT infrastructure in one hour.
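The hourly energy formula can be sketched in Python as follows. This is a minimal sketch under stated assumptions: because the TDP is given per server, it treats the 28,936 GPUs as 3,617 eight-GPU servers, and it assumes every server draws its full 6.5 kW for the whole hour, which likely overstates real average consumption. The function name is hypothetical.

```python
GPUS = 28_936
GPUS_PER_SERVER = 8        # assumption: 8-GPU A100 servers, matching the per-server TDP
TDP_KW_PER_SERVER = 6.5    # rated maximum power per server
PUE = 1.2                  # data-center overhead reported for Azure

def hourly_energy_kwh(servers: float, tdp_kw: float, pue: float, hours: float = 1.0) -> float:
    """Facility energy (kWh) for `servers` machines each drawing `tdp_kw` for `hours`."""
    return servers * tdp_kw * pue * hours

servers = GPUS / GPUS_PER_SERVER
energy = hourly_energy_kwh(servers, TDP_KW_PER_SERVER, PUE)
print(f"{servers:.0f} servers -> {energy:,.1f} kWh per hour")
```

With these inputs it prints 3617 servers -> 28,212.6 kWh per hour.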

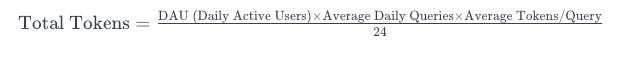

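Next, we need to determine the total number of tokens generated by ChatGPT in one hour, spreading the daily volume evenly over 24 hours:

$$\text{Tokens per Hour} = \frac{\text{DAU} \times \text{Average Daily Queries/User} \times \text{Average Tokens/Query}}{24}$$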

From leaks and industry estimates, we know the following:

DAU (daily active users): 13 million

Average Daily Queries/User: 15

Average Tokens/Query: 2,000

With this data, we can finally calculate the energy needed to generate each token, and from that the carbon footprint:

$$\text{gCO2e per Token} = \frac{\text{Energy per Hour (kWh)}}{\text{Tokens per Hour}} \times \text{Carbon Intensity (gCO2e/kWh)}$$

Energy per Token: the hourly energy consumption divided by the number of tokens generated per hour

Carbon Intensity: 240.6 gCO2e/kWh for Microsoft Azure US West

This puts the operational emissions of GPT-4 at 0.3 gCO2e per 1,000 tokens.
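The chain from hourly energy to emissions per 1,000 tokens fits in a short sketch. It assumes, hypothetically, that the 28,936 GPUs run as 3,617 eight-GPU servers at their full 6.5 kW with a PUE of 1.2, and that queries are spread evenly over the day; the function names are illustrative. Under these full-power assumptions the inputs listed above work out to roughly 0.42 gCO2e per 1,000 tokens, the same order of magnitude as the 0.3 gCO2e figure. The gap suggests the original estimate relied on further assumptions (such as an average power draw below TDP) that are not spelled out here, so both numbers are best read as rough estimates.

```python
# Inputs quoted in the post (leaks and industry estimates)
DAU = 13_000_000
QUERIES_PER_USER_PER_DAY = 15
TOKENS_PER_QUERY = 2_000
GRID_G_PER_KWH = 240.6   # carbon intensity quoted for Azure US West
# Hourly energy, assuming 3,617 eight-GPU servers at full 6.5 kW and a PUE of 1.2
ENERGY_KWH_PER_HOUR = 3_617 * 6.5 * 1.2

def tokens_per_hour(dau: int, queries: int, tokens: int) -> float:
    """Average tokens per hour, spreading the daily volume evenly over 24 hours."""
    return dau * queries * tokens / 24

def grams_per_1k_tokens(energy_kwh_per_hour: float, tokens_hour: float, g_per_kwh: float) -> float:
    """Operational emissions (gCO2e) per 1,000 generated tokens."""
    kwh_per_token = energy_kwh_per_hour / tokens_hour
    return kwh_per_token * g_per_kwh * 1_000

tph = tokens_per_hour(DAU, QUERIES_PER_USER_PER_DAY, TOKENS_PER_QUERY)
g_1k = grams_per_1k_tokens(ENERGY_KWH_PER_HOUR, tph, GRID_G_PER_KWH)
print(f"Tokens per hour: {tph:,.0f}")
print(f"gCO2e per 1k tokens: {g_1k:.2f}")
```

It prints 16,250,000,000 tokens per hour and about 0.42 gCO2e per 1,000 tokens; lowering ENERGY_KWH_PER_HOUR to reflect partial utilization brings the result down proportionally.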

Estimation for DALL-E 3

For DALL-E 2, the estimated carbon footprint was 2.2 gCO2e per image. DALL-E 3 likely benefits from technological advances and efficiency gains, but it is also a more complex model, so we hypothesize that it has a carbon footprint of at least 4 gCO2e per image.

Typical ChatGPT Emissions

So a typical query with 1,000 tokens and two generated images releases approximately 8.3 gCO2e (0.3 g for the text plus 2 × 4 g for the images), roughly equivalent to charging one smartphone or driving 30 meters in a gasoline-powered car.

Carbon ScaleDown

As foundation models like GPT-4 and DALL-E become more ubiquitous, it is increasingly clear that these powerful models also carry significant environmental costs. We must weigh the capabilities of LLMs in enhancing our digital experiences against the environmental impact they carry.

In line with this, we are excited to announce the development of Carbon ScaleDown, a Chrome extension designed to track the carbon emissions of your AI interactions. By providing real-time insights into the carbon footprint of your ChatGPT usage, we hope this extension empowers you to make more informed decisions about your digital consumption.

How it works

Every ChatGPT conversation is made up of tokens, which are essentially chunks of text that the AI processes. We use the tiktoken library to count the tokens in each chat. We also keep track of the number of images generated during your chats. This matters because image generation typically requires more computational power than text and therefore has a higher carbon footprint.

The extension calculates the carbon footprint from the token and image counts. It uses predefined metrics that estimate the energy consumption per token and per image, taking into account the average energy mix used to power the ChatGPT servers. These calculations translate digital activity into a measurable environmental impact, expressed in grams of CO2 equivalent (gCO2e).
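A per-chat estimate of this kind can be sketched as below. This is a minimal sketch, not the extension's actual code: it assumes the per-unit factors derived earlier in this post (0.3 gCO2e per 1,000 GPT-4 tokens and the hypothesized 4 gCO2e per DALL-E 3 image), and the function name is hypothetical. In practice the token count would come from a tokenizer such as tiktoken; here it is simply passed in as a number.

```python
# Illustrative emission factors taken from the estimates in this post
G_PER_1K_TOKENS = 0.3   # GPT-4 text generation
G_PER_IMAGE = 4.0       # hypothesized DALL-E 3 figure

def chat_footprint_g(tokens: int, images: int = 0) -> float:
    """Estimated operational emissions (gCO2e) for one chat."""
    if tokens < 0 or images < 0:
        raise ValueError("tokens and images must be non-negative")
    return tokens / 1_000 * G_PER_1K_TOKENS + images * G_PER_IMAGE

print(f"{chat_footprint_g(1_000, images=2):.1f} gCO2e")
```

For the typical query described earlier (1,000 tokens and two images), this prints 8.3 gCO2e.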

All the data is accessible through a user-friendly web application, where you can visualize your cumulative impact over time. This feature aims to increase awareness and encourage more sustainable digital practices.

The extension recalculates the carbon footprint with each new chat, so users always have up-to-date information on their environmental impact.
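Keeping a running total that updates after every chat can be sketched with a small tracker class. The FootprintTracker name and its fields are hypothetical, and the emission factors are the illustrative per-token and per-image estimates from this post rather than values read from the extension.

```python
from dataclasses import dataclass, field

G_PER_1K_TOKENS = 0.3   # illustrative factor from this post's GPT-4 estimate
G_PER_IMAGE = 4.0       # illustrative factor from the DALL-E 3 hypothesis

@dataclass
class FootprintTracker:
    """Hypothetical helper that updates a cumulative footprint after every chat."""
    total_tokens: int = 0
    total_images: int = 0
    history_g: list[float] = field(default_factory=list)

    def record_chat(self, tokens: int, images: int = 0) -> float:
        """Add one chat's usage and return its estimated gCO2e."""
        chat_g = tokens / 1_000 * G_PER_1K_TOKENS + images * G_PER_IMAGE
        self.total_tokens += tokens
        self.total_images += images
        self.history_g.append(chat_g)
        return chat_g

    @property
    def total_g(self) -> float:
        """Cumulative gCO2e across all recorded chats."""
        return sum(self.history_g)

tracker = FootprintTracker()
tracker.record_chat(1_000, images=2)
tracker.record_chat(500)
print(f"Total so far: {tracker.total_g:.2f} gCO2e over {len(tracker.history_g)} chats")
```

Keeping the per-chat history, rather than only a single running sum, is what would let a dashboard plot cumulative impact over time.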

Conclusion

Understanding the energy consumption and carbon emissions associated with a single AI prompt brings to light the broader issue of sustainability in the AI sector. It's not just about the financial cost of running these models; it's about their long-term impact on our planet. This realization underscores the importance of ongoing research, development, and awareness within the AI community. We need to continually seek ways to optimize the efficiency of these models and reduce their environmental footprint.