When LLMs Made Everyone a Coder

This story starts with Jenny Erpenbeck's "Kairos," a novel that arrived in my mailbox a week after I moved to Berlin. Perfect timing, I thought. A story about 1980s East Berlin would be my gateway to understanding this complex city I now call home.

The book follows two lovers - nineteen-year-old Katharina and Hans, who is thirty-four years her senior. But what captivated me most wasn't just their romance but how Berlin itself became a character. The narrative weaves through Alexanderplatz, meanders down Unter den Linden, and lingers at the Weidendammer Bridge. Each location carries the weight of history, transforming from mere settings into portals to Berlin's past.

I took endless screenshots of passages mentioning places, determined to visit them all. My photo gallery became a mess of text snippets: "Meet at Alexanderplatz," "The empty streets of Friedrichstraße," and "Walking past the Humboldt University." After the fiftieth screenshot, I had my "there has to be a better way" moment.

Enter 2023 - the year when ChatGPT convinced every Product Manager they could code. You know the type: "Why do we need engineers when AI can write code?" I decided to play this role myself, partly as an experiment and partly as satire. Armed with zero coding knowledge but infinite AI-powered confidence, I set out to build an app that would map literary journeys.

The concept was simple: Upload any book in PDF format, extract locations mentioned in the text, and generate a custom tour map. How hard could it be? GPT made it seem easy, spitting out a complete Python notebook within minutes.

The code looked clean, professional even. It worked with my copy of Kairos. I felt invincible! But then came the reality check. The location extraction was based on a predefined list of places - basically a hardcoded cheat sheet. It wasn't actually "reading" the book at all.
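Here is a minimal sketch of what a "hardcoded cheat sheet" extractor can look like. It is an illustration, not the notebook GPT produced: the function name, the place list, and both sample sentences are hypothetical. It shows why such code looks fine on the book it was tuned for and returns nothing for any other book.

```python
import re

# Hypothetical cheat sheet: places typed in by hand, not discovered in the text.
KNOWN_PLACES = [
    "Alexanderplatz",
    "Unter den Linden",
    "Weidendammer Bridge",
    "Friedrichstraße",
    "Humboldt University",
]


def extract_locations_hardcoded(text, known_places=KNOWN_PLACES):
    """Return the known places that appear in the text (case-insensitive)."""
    found = []
    for place in known_places:
        if re.search(re.escape(place), text, flags=re.IGNORECASE):
            found.append(place)
    return found


# Made-up sample sentences, not quotes from any novel.
berlin_text = "They agreed to meet at Alexanderplatz and walk down Unter den Linden."
prague_text = "She crossed the Charles Bridge and climbed toward Prague Castle."

print(extract_locations_hardcoded(berlin_text))  # ['Alexanderplatz', 'Unter den Linden']
print(extract_locations_hardcoded(prague_text))  # []
```

The second call is the giveaway: the text is full of places, but none of them are on the list, so the "extractor" finds nothing.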

This was my first lesson in the gap between AI-generated code and real problem-solving. Sure, GPT could write syntactically correct Python, but it couldn't grasp the nuanced challenge of extracting meaningful locations from literary text. It was the coding equivalent of using a calculator without understanding math.

I could almost hear my imaginary engineering team snickering. Here I was, another Product Manager who thought AI would make their jobs obsolete, learning that there's more to software development than just writing code that runs.

The v0 Experience

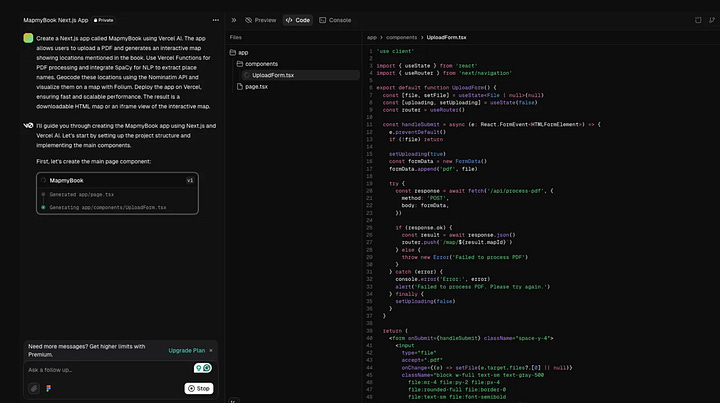

Remember how I left off feeling humbled by GPT's limitations? Well, hold my coffee, because I discovered Vercel's v0. It promised to be the ultimate "PM who thinks they can code" enabler. Just feed it a prompt, and it would build an entire app!

And boy, did v0 deliver... sort of. The code looked beautiful, with proper imports, state management, and error handling - everything a real developer would write.

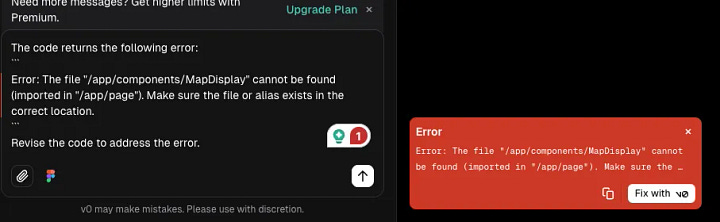

But here's where things got interesting (read: frustrating). v0 would generate code, introduce an error, and then offer to fix that error, which would introduce another mistake. It was like watching a robot play whack-a-mole with bugs.

As someone who can't read JavaScript beyond recognizing that it is, in fact, JavaScript, I was trapped in an AI-generated purgatory. I couldn't tell if v0 was fixing things or making them worse. It was like being a restaurant critic who can't taste food - I could see the code was there but had no idea if it was any good.

The real kicker? Each "fix" consumed more tokens. v0 was essentially charging me to fix its own mistakes. It was like hiring a contractor who breaks your window while repairing your door and then charges you to fix the window.

Trying out Bolt

After my adventures with v0, I discovered Bolt. What makes Bolt different from other AI coding tools is that it's not just generating code - it's running it too. Thanks to something called WebContainers, Bolt can actually install packages, run Node.js servers, and handle the entire development process right in your browser. No local setup required. For a PM like me who still thinks "npm" is a typo, this seemed perfect.

The pitch was compelling: "Whether you're an experienced developer, a PM or designer, Bolt allows you to build production-grade full-stack applications with ease." Finally, I thought, an AI tool that understands me - the enthusiastic but clueless PM who thinks they can code!

At first, Bolt seemed more... considerate? Unlike v0's "YOLO, let's write code" approach, Bolt actually stopped to point out potential issues:

"This project would require server-side processing for PDF handling and NLP..."

"SpaCy is a Python library that cannot be run directly in the browser..."

"Folium is also a Python library that generates HTML/JavaScript..."

It was like having a responsible engineer friend saying, "Hey, maybe we should think this through?" But then, in true AI fashion, it proceeded to ignore its own warnings and generate code anyway.

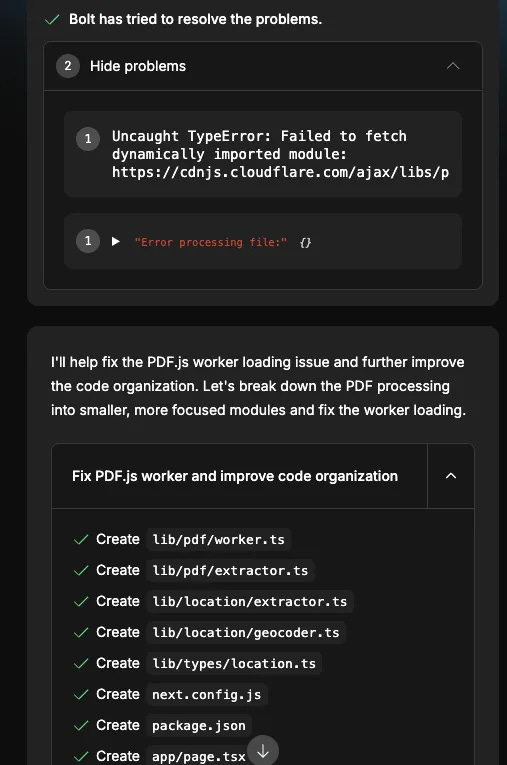

What followed was a lot of errors.

Bolt's solution? "I'll help fix the PDF.js worker loading issue and improve code organization!" It then proceeded to create a bunch of new files. Each "fix" spawned new files, which spawned new errors, which required new fixes. It was like watching a digital version of "If You Give a Mouse a Cookie" - but with TypeScript errors instead of cookies.

The most entertaining part? Bolt would proudly announce "I've made several important fixes and improvements!" right before nothing would display on the screen. It was like a contractor saying "I've fixed your plumbing!" while standing in ankle-deep water.

Back to Basics: A Streamlit App

After my adventures with v0 and Bolt, I finally had my "back to basics" moment. Instead of trying to build a complex AI-powered application, I decided to create a simple Streamlit app that would do one thing well: map locations from a book.

The key was going step by step, line by line. No AI prompting, no magic - just basic Python and Streamlit. Here's what I learned:

Read Every Line of Code: The biggest lesson? Actually read the code. When I used AI tools, I'd just copy-paste and hope for the best. But going through each line revealed simple issues that would have been maddening to debug later. For instance, I caught a function name mismatch - load_nlp_model() vs load_spacy_model() - something the AI tools kept mixing up.
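To make that kind of mismatch concrete, here is a small sketch that scans a piece of Python source for functions that are called but never defined or imported. It uses only the standard library's ast module. The helper name undefined_calls and the sample snippet are hypothetical, and the check is deliberately naive (it ignores scopes and method calls), so treat it as a reading aid rather than a linter.

```python
import ast
import builtins


def undefined_calls(source: str) -> list[str]:
    """Names called as plain functions that are never defined, assigned, or imported."""
    tree = ast.parse(source)
    defined = set(dir(builtins))  # print, len, ... count as defined
    called = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            defined.add(node.name)
        elif isinstance(node, (ast.Import, ast.ImportFrom)):
            for alias in node.names:
                defined.add((alias.asname or alias.name).split(".")[0])
        elif isinstance(node, ast.Name) and isinstance(node.ctx, ast.Store):
            defined.add(node.id)
        elif isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            called.add(node.func.id)
    return sorted(called - defined)


# Hypothetical snippet with the mismatch described above: the function is
# defined under one name and called under another.
SNIPPET = '''
import spacy

def load_spacy_model():
    return spacy.load("en_core_web_sm")

nlp = load_nlp_model()
'''

print(undefined_calls(SNIPPET))  # ['load_nlp_model']
```

Running the snippet itself would fail with a NameError on the last line; the scan just surfaces the same problem without having to execute anything.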

Start Simple, Then Build: Instead of trying to build everything at once, I broke it down:

- First, get a PDF upload working

- Then extract text from the PDF

- Next, identify locations using spaCy

- Finally, plot these on a map

Each step was testable and debuggable. When something broke, I knew exactly where to look.
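The steps above can be sketched as one small function each. This is an outline under stated assumptions, not the app's actual code: pypdf as the PDF library, the en_core_web_sm model, the set of entity labels, and the hand-written coordinates lookup are all assumptions, and every function name here is hypothetical. Third-party imports sit inside the functions so each step can be tried on its own.

```python
from collections import Counter

# spaCy entity labels treated as places here (an assumption):
# GPE = countries/cities, LOC = other locations, FAC = buildings, bridges, squares.
PLACE_LABELS = {"GPE", "LOC", "FAC"}


def extract_text(pdf_file) -> str:
    """Pull plain text out of a PDF path or file-like object (assumes pypdf)."""
    from pypdf import PdfReader

    reader = PdfReader(pdf_file)
    return "\n".join(page.extract_text() or "" for page in reader.pages)


def load_spacy_model(name: str = "en_core_web_sm"):
    """Load a spaCy pipeline; the model must be downloaded beforehand."""
    import spacy

    return spacy.load(name)


def find_locations(doc) -> Counter:
    """Count place-like entities in a spaCy Doc (any object with .ents works)."""
    return Counter(ent.text.strip() for ent in doc.ents if ent.label_ in PLACE_LABELS)


def to_points(counts: Counter, coordinates: dict) -> list:
    """Keep places with known coordinates as (name, lat, lon, mentions) tuples."""
    return [
        (name, *coordinates[name], mentions)
        for name, mentions in counts.most_common()
        if name in coordinates
    ]


def build_map(points: list, path: str = "tour.html") -> str:
    """Drop one marker per place on a folium map and save it as HTML."""
    import folium

    tour_map = folium.Map(location=[points[0][1], points[0][2]], zoom_start=13)
    for name, lat, lon, mentions in points:
        folium.Marker([lat, lon], popup=f"{name} ({mentions} mentions)").add_to(tour_map)
    tour_map.save(path)
    return path


# Usage sketch (needs pypdf, spaCy with a model, and folium installed):
#   text = extract_text("book.pdf")
#   counts = find_locations(load_spacy_model()(text))
#   coords = {"Alexanderplatz": (52.5219, 13.4132)}  # approximate, typed by hand
#   build_map(to_points(counts, coords))
```

Note the gap the sketch leaves open: entity recognition gives you names, not coordinates, so something still has to turn "Alexanderplatz" into a latitude and longitude. Here that is a hand-written lookup.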

The Power of Streamlit: No complex frameworks, no elaborate setups - just straightforward Python with Streamlit. The result was a clean, functional app that:

- Has a split layout for better organization

- Processes PDFs efficiently

- Creates an interactive map

- Provides a context-aware tour guide

Future-Proofing Your App: Here's a thought that occurred to me: as the internet becomes increasingly saturated with AI-generated content, apps that don't rely on LLM APIs might be more sustainable. They're:

- More reliable (no API dependencies)

- Cheaper to run (no token costs)

- Easier to maintain (simpler codebase)

- More predictable (no AI hallucinations)

The irony isn't lost on me - after trying to use AI to build my app, I ended up writing it myself. But here's the thing: AI tools are great for learning and prototyping, but sometimes the best solution is the simplest one.

The Final Lesson: Being a PM who can code doesn't mean relying on AI to do all the work. It means understanding enough about coding to:

- Break down problems into manageable pieces

- Read and understand basic code

- Know when to use AI tools and when to code manually

- Appreciate the complexity of what engineers do

So while AI coding tools are impressive, they're best used as assistants, not replacements. Sometimes the best way to build something is to roll up your sleeves and write the code yourself - even if you're a PM who's just learning to code.

Let me add one final, crucial observation from this journey, the thing that companies rushing to replace engineers with AI tools are missing: we don't need fewer engineers in the AI era - we need engineers who can debug AI-generated code. Think about my experience:

- v0 generated perfectly formatted, completely broken code

- Bolt created elaborate file structures that didn't work

- Even my final Streamlit app needed careful debugging to catch silly errors like mismatched function names

Each time an AI spits out code, someone needs to:

- Understand what the code is trying to do

- Identify why it's not doing it

- Figure out how to fix it

- Ensure the fix doesn't break something else

The irony is delicious: AI is making engineers more essential, not less. It's like having a really enthusiastic junior developer who can write code at lightning speed but needs someone to review every line. The more code AI generates, the more skilled engineers need to make sense of it.

So, to any tech executives reading this: before you lay off your engineering team in favor of AI coding tools, remember my journey from enthusiastic PM to humbled coder. AI isn't replacing engineers - it's changing their job description from "write all the code" to "make sure the AI's code works."

And to all the engineers who've been laid off with the explanation that "AI can do your job now" - take my story as evidence that it very much cannot. At least, not without someone like you to fix its mistakes.

P.S. If you're a PM considering building an app - start with something simple. Use AI tools to learn, but don't rely on them entirely. And most importantly, read the code. Every. Single. Line.