Your Go-To Guide to Master Prompt Engineering in Large Language Models

Learn about the different Prompt Engineering Techniques

Hello readers! Welcome back to our series on Large Language Models (LLMs). Previously, we took a deep dive into what LLMs are and how to fine-tune them. Now, we're about to explore another crucial area in working with LLMs—Prompt Engineering.

An Introduction to Prompt Engineering

Think of training a pet to perform tricks. To make your dog sit, you don't just yell "Sit!" once. You repeat the command several times, rewarding good behaviour, until your pet understands the command. In many ways, Prompt Engineering is similar. It involves 'teaching' a computer program to comprehend and respond to certain inputs, also known as 'prompts'. The better your prompts, the better the computer's output.

In technical jargon, Prompt Engineering means crafting inputs that guide an LLM like ChatGPT to produce the desired outputs. The trick lies in understanding how these models process inputs and tailoring those inputs for optimal results.

The Parrot Analogy—When to Prompt Engineer

Now let's imagine a scenario. You have a pet parrot that you'd like to teach to sing a specific song. You have to meticulously choose your words (prompts) and instructions, ensuring the parrot understands and responds precisely how you want. It might require time, a few attempts, and some patience.

But there are times when you'd let the parrot be, enjoying its random chatter and songs. Or perhaps you enjoy its song as relaxing background noise. Maybe you don't have time to teach the parrot a new song, and you're content with whatever it chooses to sing.

This 'Parrot Analogy' is a fun and simple way to grasp when to (and when not to) apply Prompt Engineering to LLMs. It pays off when you need more controlled outputs from an LLM or when you are dealing with complex queries. It's also useful when a task depends on knowledge beyond the LLM's training data, because you can supply that information in the prompt itself.

On the flip side, for simple interactions with the LLM—like asking for definitions or engaging in open-ended conversation—you may not need Prompt Engineering. Also, if you lack the time, expertise, or resources for extensive testing and refining of prompts, diving into Prompt Engineering might not be the best choice.

The Crossroads—Fine-Tuning vs. Prompt Engineering

When deciding between fine-tuning and prompt engineering, consider your resources and the nature of the task. If you need the model to perform a new task or behave in a very specific way and have substantial computational resources and task-specific data, fine-tuning might be your best bet.

However, if you just need the model to respond in a particular way to a specific prompt and have the expertise to design and test these prompts, then prompt engineering is your go-to tool. While it is generally less resource-intensive than fine-tuning, prompt engineering still requires a good understanding of the model's functionality and a clear aim for your desired output.

Deciding to Do Prompt Engineering

To decide if you should opt for prompt engineering, first identify your project's needs. If your interaction with the LLM is simple and general, you may use the LLM as is. However, if the requirements are complex and specific, evaluate your resources. Do you have access to substantial task-specific data and computational resources? If yes, consider fine-tuning the LLM. If not, assess your capabilities and time commitment. If you can dedicate time to iteratively experiment with prompts, then prompt engineering should be your choice.
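
The decision flow above can be sketched as a small Python function. This is only an illustration: the function name, the boolean parameters, and the returned labels are assumptions made for this sketch, and the final fallback is a case the text does not cover.

```python
def choose_approach(task_is_complex: bool,
                    has_data_and_compute: bool,
                    can_iterate_on_prompts: bool) -> str:
    """Suggest an approach by walking the questions in the order given above."""
    if not task_is_complex:
        # Simple, general interactions need no extra work.
        return "use the LLM as is"
    if has_data_and_compute:
        # Substantial task-specific data and compute are available.
        return "consider fine-tuning"
    if can_iterate_on_prompts:
        # Time and expertise to experiment with prompts are available.
        return "prompt engineering"
    # Assumption: the article does not say what to do here.
    return "revisit the project's scope or resources"


print(choose_approach(task_is_complex=True,
                      has_data_and_compute=False,
                      can_iterate_on_prompts=True))
```

Keeping the questions in the same order as the text matters: fine-tuning is only considered once the task is known to be complex, and prompt engineering only once fine-tuning has been ruled out.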

Types of Prompt Engineering

How you phrase your prompt can significantly affect the response from an LLM, so an effective prompt is vital. But what exactly is an effective prompt? Let's explore some types:

Basic Prompting

This is straightforward—ask your question or state your command to the model. For example, you might ask the model to translate a sentence from English to Spanish. The complexity and specificity of the task determine whether you'll need to move beyond basic prompting. There are two types of Basic Prompting:

Zero-shot learning:

Zero-Shot Learning involves feeding the task directly to the model without any prior examples. This is like asking a friend a question out of the blue and expecting them to understand and answer it accurately.

Zero-shot learning is a concept in machine learning where a model makes predictions or decisions about unseen data without any prior examples.

In the context of language models like GPT-3, zero-shot learning means giving the model a task without providing any examples of that task. The model must use its training and general knowledge of language to figure out what kind of response is expected.
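
As a rough illustration, a zero-shot prompt is just the task plus the input, with no worked examples. The helper name `zero_shot_prompt` and the "Text:" / "Answer:" layout below are assumptions chosen for this sketch, not a required format.

```python
def zero_shot_prompt(task: str, text: str) -> str:
    """Build a prompt that states the task directly, with no examples."""
    return f"{task}\n\nText: {text}\nAnswer:"


prompt = zero_shot_prompt(
    "Translate the following sentence from English to Spanish.",
    "The weather is nice today.",
)
print(prompt)
```

The resulting string is what you would send to the model; everything the model knows about the task comes from the single instruction line.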

Few-shot learning:

Few-shot learning presents a set of high-quality demonstrations on the target task before asking the model to perform a similar task. This is like showing your friend a few examples of similar questions and their answers before asking your question. It gives the model some context and guidance about what is expected.

Few-shot learning, on the other hand, is a concept where a model is able to make decisions or predictions about unseen data after being given a few examples.

In the context of language models, few-shot learning means giving the model a few examples of the task before asking it to complete a similar task. This is done to provide some context and guidance about what is expected.
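
A few-shot prompt can be sketched as a list of solved examples followed by the unsolved query. The function name, the "Input:" / "Output:" labels, and the sample reviews are made up for illustration.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Place solved (input, output) pairs before the new input."""
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The final block leaves the output empty for the model to complete.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


examples = [
    ("I loved this phone, the battery lasts for days.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
prompt = few_shot_prompt(examples, "Delivery was fast and setup was easy.")
print(prompt)
```

Using the same layout for the demonstrations and the query helps the model see which part it is expected to fill in.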

Instruction Prompting

Here, the task requirements are explicitly stated in the prompt. For example, you might ask the model to summarize a text but also specify that the summary should be in bullet points and must not exceed five lines.

For example, if you want a language model to write a story about a unicorn in a magical forest, you could simply prompt it with "Write a story about a unicorn in a magical forest". This is a simple prompt without specific instructions.

But with instruction prompting, you could say, "Write a story for children aged 6-8 years old about a friendly unicorn living in a magical forest filled with talking trees and magical creatures. The story should be fun, educational, and have a positive message about friendship." This is a more detailed instruction prompt that guides the model to produce a specific type of output.
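
One way to keep such instructions explicit is to assemble the prompt from a task sentence and a list of requirements. The sketch below reuses the unicorn example; the function name and the numbered "Requirements:" layout are assumptions, not a prescribed template.

```python
from typing import List


def instruction_prompt(task: str, requirements: List[str]) -> str:
    """State the task first, then list each requirement on its own line."""
    lines = [task, "", "Requirements:"]
    lines.extend(f"{i}. {req}" for i, req in enumerate(requirements, start=1))
    return "\n".join(lines)


prompt = instruction_prompt(
    "Write a story about a friendly unicorn living in a magical forest.",
    [
        "Write for children aged 6-8 years old.",
        "Include talking trees and magical creatures.",
        "Keep the story fun and educational.",
        "End with a positive message about friendship.",
    ],
)
print(prompt)
```

Listing requirements separately makes it easy to add, remove, or reword one constraint at a time while you test the prompt.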

In-Context Instruction Learning

This technique combines multiple demonstrations across different tasks. By providing contextual examples, you can guide the LLM to produce the desired output.

For example, suppose the language model has not been explicitly trained to convert sentences from active to passive voice. By providing a few worked examples within the prompt (few-shot learning), you guide the model to the pattern and structure that you want.
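
The active-to-passive case could be sketched as follows. Each demonstration carries its own instruction, so demonstrations from different tasks can share one prompt. The `Demo` class, the field names, and the sample sentences are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Demo:
    instruction: str
    source: str
    target: str


def in_context_prompt(demos: List[Demo], instruction: str, source: str) -> str:
    """Join instruction-labelled demonstrations, then append the new task."""
    parts = [
        f"Instruction: {d.instruction}\nInput: {d.source}\nOutput: {d.target}"
        for d in demos
    ]
    parts.append(f"Instruction: {instruction}\nInput: {source}\nOutput:")
    return "\n\n".join(parts)


PASSIVE = "Rewrite the sentence in the passive voice."
demos = [
    Demo(PASSIVE, "The chef cooked the meal.", "The meal was cooked by the chef."),
    Demo(PASSIVE, "The dog chased the cat.", "The cat was chased by the dog."),
    Demo("Rewrite the sentence as a question.", "The train is late.", "Is the train late?"),
]
prompt = in_context_prompt(demos, PASSIVE, "The committee approved the proposal.")
print(prompt)
```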

Chain of Thought Prompting

Some tasks may be too complex to express in a single prompt. This approach breaks down a complex task into a sequence of simpler tasks, making it easier for the model to handle.

This method generates a sequence of short sentences to describe the reasoning logic step by step, known as reasoning chains or rationales. This step-by-step approach can lead the model to the final answer in a more controlled and understandable manner.

Let's say you want the LLM to reason about a hypothetical situation where a new infectious disease is spreading, and you want to know if closing schools would be a good strategy to slow down the spread. This is a complex question that requires an understanding of multiple domains (epidemiology, social dynamics, etc.) and can't be answered directly without adequate context.

Instead of asking the LLM directly, you could break down the task using Chain of Thought (CoT) Prompting:

"Describe how infectious diseases spread in a population."

"Explain how school environments might contribute to the spread of infectious diseases."

"Discuss the potential impacts on disease spread if schools were closed."

"Considering all the above, would closing schools be a good strategy to slow down the spread of a new infectious disease?"

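The four prompts above can be run in sequence, with each step seeing the earlier questions and answers. The sketch below assumes a generic `ask` callable that takes a prompt and returns text; `fake_llm` is a stand-in so the example runs without any model or API, and both names are hypothetical.

```python
from typing import Callable, List


def run_step_chain(steps: List[str], ask: Callable[[str], str]) -> List[str]:
    """Ask each step in order, passing earlier Q/A pairs along as context."""
    answers: List[str] = []
    for step in steps:
        # zip stops at the shorter list, so only answered steps are included.
        context = "\n".join(f"Q: {q}\nA: {a}" for q, a in zip(steps, answers))
        prompt = f"{context}\n\nQ: {step}\nA:" if context else f"Q: {step}\nA:"
        answers.append(ask(prompt))
    return answers


def fake_llm(prompt: str) -> str:
    """Stand-in model: reports how many earlier answers it was shown."""
    return f"(answer written with {prompt.count('Q:') - 1} earlier answers as context)"


steps = [
    "Describe how infectious diseases spread in a population.",
    "Explain how school environments might contribute to the spread of infectious diseases.",
    "Discuss the potential impacts on disease spread if schools were closed.",
    "Considering all the above, would closing schools be a good strategy "
    "to slow down the spread of a new infectious disease?",
]
for answer in run_step_chain(steps, fake_llm):
    print(answer)
```

Swapping `fake_llm` for a real model call is the only change needed to try this against an actual LLM.
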
Self Consistency Sampling

This method involves generating multiple responses and evaluating them to select the best. It's like brainstorming, where you generate many ideas and then pick the one that best meets your criteria.

Imagine you're in a debate club, and you're asked to prepare arguments for a topic. You wouldn't just come up with one argument and immediately present it, right? You'd probably brainstorm several different arguments, evaluate each one, and then select the strongest one to present to the club. This is essentially what self-consistency sampling does. The language model generates multiple responses (the "arguments") to the same prompt, and the final answer is the one those responses agree on most often, on the reasoning that the most consistent answer is the most likely to be right.
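
A minimal sketch of this idea uses a majority vote over several sampled answers. It assumes a `sample` callable that returns one final answer per call; `fake_sampler` replays canned answers so the example is deterministic, and both names are hypothetical.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, Tuple


def self_consistent_answer(prompt: str,
                           sample: Callable[[str], str],
                           n: int = 5) -> Tuple[str, int]:
    """Sample n answers and return the most common one with its vote count."""
    answers = [sample(prompt) for _ in range(n)]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes


# Stand-in for a model sampled with some randomness: replays canned answers.
_canned = cycle(["18", "17", "18", "18", "20"])


def fake_sampler(prompt: str) -> str:
    return next(_canned)


answer, votes = self_consistent_answer("What is 3 * 6?", fake_sampler, n=5)
print(answer, votes)  # prints: 18 3
```

A majority vote only works when answers can be compared directly, for example a number or a label; free-form text would need some other way to judge agreement.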

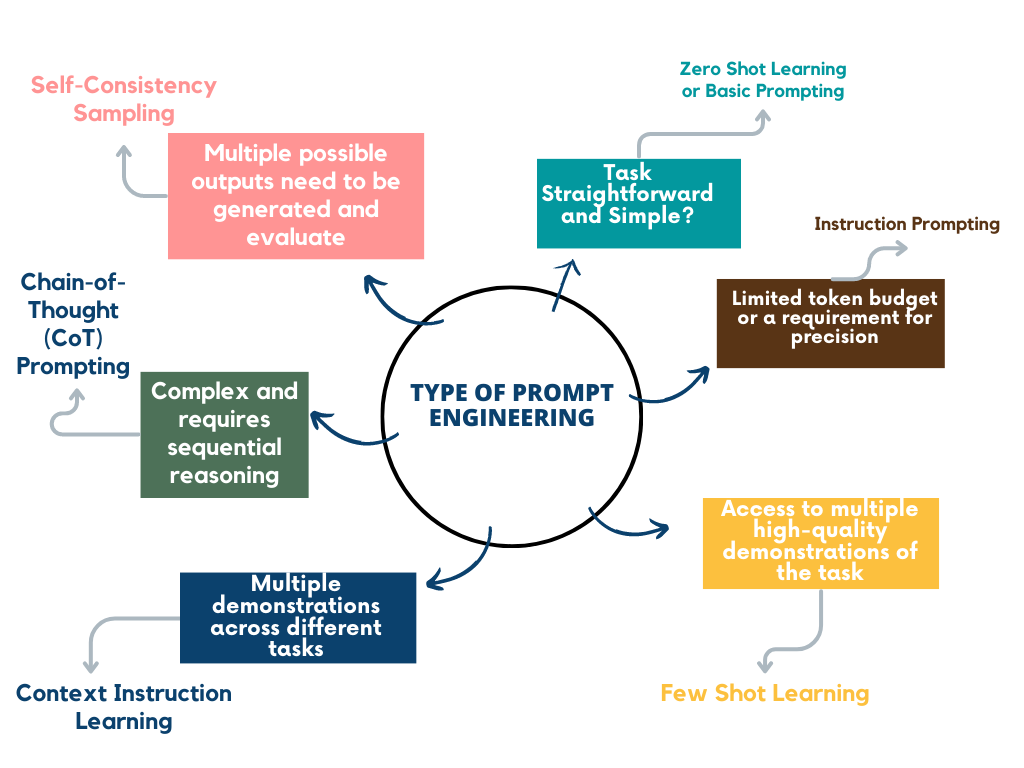

Deciding which type of Prompt Engineering to pick

Let's say a technology company is working on developing an ML system to handle customer service inquiries.

Task Simplicity: The company starts by assessing the complexity of its task. Customer service inquiries can range from simple queries about its products or services to more complex problems that need troubleshooting. Taken as a whole, the task is not simple, so the company moves on to the next step.

Token Budget and Precision Requirements: The company has a fairly generous token budget, but they want to ensure the responses to customer inquiries are precise and helpful. However, the diverse nature of customer inquiries makes it challenging to formulate a clear set of instructions for the LLM to follow. So, they decide Instruction Prompting might not be the most suitable option and move on to the next consideration.

Access to High-Quality Demonstrations: Fortunately, the company has years of customer service logs that include a wide variety of inquiries and their corresponding responses. It can use this wealth of high-quality demonstrations to guide the LLM. Therefore, Few-Shot Learning seems like an excellent fit. The company can include examples of past inquiries and responses in the prompt, which should help the LLM understand and reproduce the correct types of responses.

Multiple Demonstrations Across Different Tasks: If the company didn't have such a comprehensive set of customer service logs for a single task, but they had examples of different types of tasks, they could have considered using In-Context Instruction Learning. However, in this case, it's not necessary as they proceed with Few-Shot Learning.

Complex Tasks and Sequential Reasoning: Assuming some of the inquiries the company receives require complex troubleshooting that needs sequential steps, they might want to employ Chain-of-Thought (CoT) Prompting in combination with Few-Shot Learning for these specific tasks.

Need for Multiple Outputs: To handle inquiries where there isn't a single correct answer, like providing suggestions for product usage, the company could consider using Self-Consistency Sampling. This would allow the LLM to generate a variety of responses and select the best one.

Consultation with a Specialist: Even after successfully implementing its product, the company would likely benefit from regular consultations with specialists. They can help identify areas for improvement, refine the task as needed, and suggest additional approaches if necessary.

By following this decision tree, the company can determine the best prompt engineering techniques to guide its LLM effectively, ensuring high-quality customer service responses.
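
One reading of the walkthrough above can be sketched as a function. The parameter names, the returned labels, and the fallback branch are assumptions made for this illustration; the steps follow the order of the considerations in the text.

```python
from typing import List


def pick_techniques(simple_task: bool,
                    clear_instructions: bool,
                    task_demos: bool,
                    cross_task_demos: bool,
                    needs_sequential_steps: bool,
                    no_single_answer: bool) -> List[str]:
    """Pick a base technique, then add optional ones for harder inquiries."""
    if simple_task:
        return ["basic prompting"]
    if clear_instructions:
        chosen = ["instruction prompting"]
    elif task_demos:
        chosen = ["few-shot learning"]
    elif cross_task_demos:
        chosen = ["in-context instruction learning"]
    else:
        # Assumption: the text gives no base technique for this case.
        chosen = ["consult a specialist"]
    if needs_sequential_steps:
        chosen.append("chain-of-thought prompting")
    if no_single_answer:
        chosen.append("self-consistency sampling")
    return chosen


# The customer service scenario: complex task, no clear instruction set,
# plenty of past logs, some multi-step and some open-ended inquiries.
print(pick_techniques(False, False, True, False, True, True))
```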

In the next chapter of our series, we'll be exploring 'reward modelling', a fascinating concept that's akin to offering treats to your pet for good behaviour. Reward modelling is a mechanism to enhance the performance of our LLM 'parrot', not just by using the right commands but also by offering the right incentives for each task.

So, buckle up and prepare to delve deeper into the fascinating world of LLMs. As we set sail on this new adventure, we can look forward to more tales of parrots and pets, of melodies and songs, and of crafting the perfect conversation with our LLM companions.

Thank you for accompanying us on this enlightening journey through the art of prompt engineering. Keep learning, keep exploring, and most importantly, keep having fun. Until next time!