Vector Database Design Patterns

An introduction to vector databases and how to use them in LLM applications

Databases have long been used to store data in many forms, such as text, numbers, or files. Depending on the nature of your application and the structure of your data, you can choose from a range of databases or opt for an object storage service like Amazon S3. These conventional systems store and retrieve data efficiently and serve as the backbone of many applications.

However, the process becomes more complex when machine learning (ML) models are involved. In such scenarios, you typically query your database for the relevant data, process it, pass the processed data to the ML model, post-process the model’s output, and finally serve the response to your client. The output of many ML models is a vector: a one-dimensional array of numbers that represents the model’s understanding of the input data. Notably, similar inputs produce similar vectors, and the similarity between two vectors can be quantified by calculating the distance between them in the vector space. This property opens up opportunities to build applications and solve complex tasks more effectively, but to capitalize on vector data we need a seamless and efficient way of working with it. This is where vector databases come into play.
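To make "distance between vectors" concrete, here is a minimal sketch of two common measures, cosine similarity and Euclidean distance. The three-dimensional vectors are made up for illustration; real embeddings usually have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def euclidean_distance(a, b):
    """Straight-line distance between two points (0.0 means identical)."""
    return math.dist(a, b)


# Hypothetical embeddings, invented for this example only.
cat = [0.9, 0.1, 0.0]
kitten = [0.8, 0.2, 0.1]
invoice = [0.0, 0.1, 0.9]

print(cosine_similarity(cat, kitten) > cosine_similarity(cat, invoice))
print(euclidean_distance(cat, kitten) < euclidean_distance(cat, invoice))
```

Both comparisons agree that `kitten` is closer to `cat` than `invoice` is. Which measure works best depends on how the embedding model was trained, so it is worth checking the model's documentation.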

This post examines the advantages of vector databases and common patterns for building applications that leverage vector data.

The Benefits of Vector Databases in LLM-Based Applications

Vector databases are designed specifically for storing and managing vectors, enabling efficient comparison and retrieval of similar vectors within the database. They typically employ a vector index, which optimizes the storage and retrieval of vectors, along with various querying methods to find similar vectors quickly. Additionally, vector databases can store additional metadata related to the embeddings, such as the input used to create the embedding.
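As a rough mental model, the sketch below stores vectors alongside metadata and returns the most similar records for a query. The class name `InMemoryVectorStore` and its methods are hypothetical, and the search is a brute-force scan. Production vector databases typically replace that scan with an approximate nearest-neighbor index so queries stay fast at scale.

```python
import math
from dataclasses import dataclass, field


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class InMemoryVectorStore:
    # Each record is a (vector, metadata) pair.
    records: list = field(default_factory=list)

    def add(self, vector, metadata):
        """Store a copy of the vector together with its metadata."""
        self.records.append((list(vector), dict(metadata)))

    def search(self, query, k=3):
        """Return up to k (score, metadata) pairs, most similar first."""
        scored = [(cosine(query, vec), meta) for vec, meta in self.records]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


# Hypothetical two-dimensional embeddings, invented for this example.
store = InMemoryVectorStore()
store.add([0.9, 0.1], {"text": "What is the capital of France?"})
store.add([0.1, 0.9], {"text": "How do I reset my password?"})

top = store.search([0.8, 0.2], k=1)
print(top[0][1]["text"])
```

Keeping the original input in the metadata is what lets the application return readable text, rather than raw numbers, once a similar vector has been found.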

Let’s consider an example scenario involving a database of questions and answers that your application uses to respond to user queries. One of the stored questions could be “What is the capital of France?” However, users may phrase the same question differently, such as “Can you tell me the capital city of France?” or “What city is considered the capital of France?” Although the intent of these questions is identical, exact text matching will not detect it. If you create embeddings of these questions, however, their vectors will lie close to the original question’s vector in the vector space. Leveraging this similarity, your application can serve the same answer to all of these variations.

With the rise of large language model (LLM) applications, vector databases have gained significant popularity due to their ability to find semantically similar items. They offer several advantages that make them powerful tools in this domain.

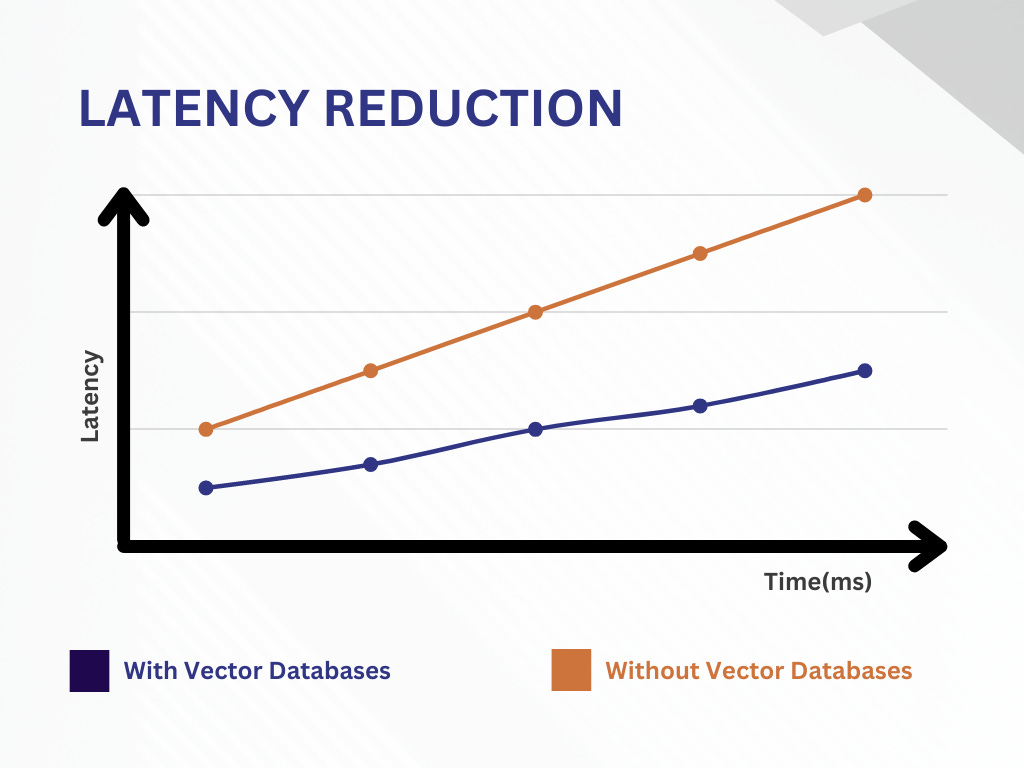

Latency Reduction

LLMs are powerful models, but obtaining results from them can be time-consuming. We can retrieve cached results by leveraging vector databases instead of making new API calls to the LLM. With vector databases, semantically similar inputs can be retrieved even if they don’t precisely match the user’s submitted queries. This caching mechanism significantly reduces latency, allowing for faster response times and a smoother user experience. With the ability to fetch pre-calculated results from the vector database, we eliminate the need to repetitively query the LLM for frequently asked questions, optimizing overall system performance.

Cost Optimization

One of the significant challenges associated with LLM-based applications is the cost of calling LLM APIs. These API calls can quickly become prohibitively expensive, potentially rendering a business unsustainable. However, vector databases provide an alternative approach. By storing high-quality answers in the database for common questions, we can serve these saved responses directly, eliminating the need for costly API calls. This strategy reduces expenses and enables businesses to scale their applications more efficiently.

Reducing Hallucinations

LLMs generate fluent text, but they are not immune to “hallucinations”: fabricated answers that are inaccurate or irrelevant. Vector databases help address this challenge. By using the database to find text relevant to the user’s query, we can supply that text as context to the LLM when it generates an output. Grounding the model in meaningful, relevant information reduces the chance that it hallucinates or produces incorrect answers. This improves the reliability and accuracy of LLM outputs, enhancing the overall user experience and building trust in the system.

Vector Database Workflow

Embedding Inputs

The first step in working with a vector database is to embed the inputs. This process involves converting the input data into numerical representations (vectors) that capture the essential features and semantics of the data. Various techniques can be employed to generate embeddings, such as pre-trained language models (e.g., GPT) or domain-specific embedding models tailored to the specific task or dataset. Embedding models have also been created for tabular data, audio and images. These models map the input data to high-dimensional vector representations, preserving the contextual and semantic information required for effective retrieval.

Embedding Long Inputs

In many applications, input data can be long and exceed the maximum token limit imposed by models or vector databases. To handle such cases, additional steps are required to embed long inputs effectively.

One approach is to divide the long input into smaller chunks or segments and embed each piece individually. For example, in a document search application, lengthy documents can be split into paragraphs or sections, with each one embedded separately. This allows for more granular indexing and retrieval of relevant segments based on user queries.

By breaking down long inputs into smaller parts and embedding them individually, the vector database can efficiently handle large volumes of data while preserving the semantic relationships within the information.
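The chunking step can be sketched as follows. This is a minimal illustration with some assumptions: the function name `chunk_words` is hypothetical, and word counts stand in for token counts, which a real application would measure with the tokenizer of its embedding model. The small overlap between neighboring chunks is an optional choice intended to avoid cutting a thought in half at a boundary.

```python
def chunk_words(text, max_words=200, overlap=20):
    """Split text into chunks of at most max_words words.

    Consecutive chunks share `overlap` words. Word counts are only a
    rough proxy for the token limits of a real embedding model.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in the range [0, max_words)")

    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this chunk has reached the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


document = " ".join(str(i) for i in range(10))  # "0 1 2 ... 9"
for chunk in chunk_words(document, max_words=4, overlap=1):
    print(chunk)
```

Each chunk would then be embedded and stored separately, ideally with metadata that records which document and position it came from.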

Visualizing Embeddings

While embeddings make sense to models, they can be challenging for humans to interpret and understand. However, visualizing embeddings is crucial for ensuring the effectiveness of the embedding model and gaining insights into the relationships it has learned, which can be utilized to mitigate bias.

Several popular tools exist for visualizing embeddings, such as Nomic’s Atlas and TensorFlow’s Embedding Projector. These tools enable developers to understand the embeddings visually, evaluate their performance and uncover valuable insights about the relationships and patterns learned by the model, potentially exposing bias in the model as well.

Examples of Application Patterns

So how can applications leverage vector databases? The sections below look at some common patterns for integrating vector databases into LLM applications.

Question-Answering (QA)

Question-answering is one of the most common use cases for vector databases. In this scenario, a user poses a question, and to ensure accurate answers without hallucinations, providing contextual information becomes crucial. To tackle this, your vector database should already contain the relevant documents from which you want to retrieve answers.

For instance, consider a question-answering system built on Wikipedia data. You split each article into chunks, generate an embedding for each chunk, and upload the embeddings, together with the chunk text, to your vector database. When a user submits a question, you generate an embedding for the question and search the database for similar embeddings. Ideally, the chunks associated with the retrieved embeddings contain the context the LLM needs to answer. You can then pass that context to the LLM with a prompt such as:

“Answer the following question:

{question}

Using information from the context below:

{context}”
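The retrieval and prompt-assembly steps can be sketched like this. Note the loud assumption: `toy_embed` is a stand-in that merely counts words, so it only matches on shared vocabulary and does not capture meaning the way a real embedding model would. The function names and sample chunks are hypothetical.

```python
import math
import re
from collections import Counter

PROMPT_TEMPLATE = (
    "Answer the following question:\n\n"
    "{question}\n\n"
    "Using information from the context below:\n\n"
    "{context}"
)


def toy_embed(text):
    """Stand-in for a real embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_prompt(question, chunks, k=2):
    """Pick the k chunks most similar to the question and fill the template."""
    query = toy_embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(query, toy_embed(c)), reverse=True)
    context = "\n\n".join(ranked[:k])
    return PROMPT_TEMPLATE.format(question=question, context=context)


chunks = [
    "Paris is the capital of France.",
    "Berlin is the capital of Germany.",
    "The Eiffel Tower was completed in 1889.",
]
prompt = build_prompt("What is the capital of France?", chunks, k=1)
print(prompt)
```

In a real system, the sorting step would be replaced by a query against the vector database, and the resulting prompt would be sent to the LLM.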

Image Search

Image search follows a similar approach to question-answering. In this case, your vector database already contains vectors representing images, such as your photo library. Suppose you capture a picture of a stunning sunset and want to find other sunset pictures you have taken. You can identify other images that share visual similarities by creating an embedding for your sunset picture and searching for similar embeddings in the vector database. Unlike question-answering, prompts are unnecessary here since the objective is to find similar images rather than asking questions about the image.

Long-Term Memory

One notable feature of ChatGPT is its ability to maintain context from previous conversations. For instance, when you ask ChatGPT to “tell me more” or “elaborate,” it understands that you want additional information related to its previous answer.

Internally, ChatGPT is provided with your current question as a prompt and with the entire previous conversation, enabling it to maintain contextual understanding. However, this context can be lost if the conversation becomes too long and exceeds the token limit. To address this, you can employ vector databases to extract relevant portions of the chat and provide them as context. This allows you to query the current chat and past chats that occurred days or even weeks ago, ensuring a more seamless and natural flow in chat-based applications.
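One way to sketch this pattern: rank past messages by similarity to the current question, keep as many as fit in a budget, and return them in their original order. The function `select_memory`, the word-based budget (a rough proxy for a token limit), and the two-dimensional vectors are all assumptions made for illustration.

```python
import math


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def select_memory(query_vector, history, max_words=50):
    """Choose the past messages most relevant to the query within a budget.

    history is a list of (text, vector) pairs in chronological order.
    The selected messages are returned in chronological order too.
    """
    ranked = sorted(
        range(len(history)),
        key=lambda i: cosine(query_vector, history[i][1]),
        reverse=True,
    )
    chosen, used = [], 0
    for i in ranked:
        cost = len(history[i][0].split())
        if used + cost > max_words:
            continue  # Skip messages that would exceed the budget.
        chosen.append(i)
        used += cost
    return [history[i][0] for i in sorted(chosen)]


# Hypothetical chat history with invented embeddings.
history = [
    ("We discussed hiking trails in Norway.", [0.9, 0.1]),
    ("You asked about tax deadlines.", [0.1, 0.9]),
    ("Bring waterproof boots for the fjord hike.", [0.8, 0.3]),
]
memory = select_memory([1.0, 0.0], history, max_words=13)
print(memory)
```

Here the two hiking messages fill the budget and the unrelated tax message is left out. The selected text would be placed in the prompt ahead of the current question.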

Caching Common/Past Answers

As mentioned earlier, vector databases offer significant cost savings and reduced latency. Caching answers to frequently asked questions is one effective approach. In this scenario, a database is created with question-answer pairs. The questions are embedded into the vector database, and new questions are matched with past questions. By returning the answer associated with past questions, you avoid querying an LLM, thereby saving costs and delivering answers faster.
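A minimal sketch of such a cache is shown below. The class `SemanticCache`, the helper `answer_question`, the `fake_llm` stand-in, and the threshold of 0.95 are all hypothetical. In particular, the threshold needs tuning on real data: set too low, the cache returns answers to questions that only look similar.

```python
import math


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, threshold=0.95):
        self.threshold = threshold
        self.entries = []  # (question_vector, answer) pairs

    def store(self, question_vector, answer):
        self.entries.append((list(question_vector), answer))

    def lookup(self, question_vector):
        """Return the best cached answer, or None if nothing is close enough."""
        best_score, best_answer = 0.0, None
        for vector, answer in self.entries:
            score = cosine(question_vector, vector)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None


def answer_question(question_vector, cache, call_llm):
    """Serve from the cache when possible; otherwise call the LLM and cache."""
    cached = cache.lookup(question_vector)
    if cached is not None:
        return cached
    fresh = call_llm()
    cache.store(question_vector, fresh)
    return fresh


llm_calls = []


def fake_llm():
    """Stand-in for an expensive LLM API call; records each invocation."""
    llm_calls.append(1)
    return "Paris"


cache = SemanticCache(threshold=0.95)
first = answer_question([1.0, 0.0], cache, fake_llm)     # miss: calls the LLM
second = answer_question([0.99, 0.05], cache, fake_llm)  # near-duplicate: hit
third = answer_question([0.0, 1.0], cache, fake_llm)     # unrelated: miss
print(len(llm_calls))
```

Three questions result in only two LLM calls, because the second question is close enough to the first to be served from the cache.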

To enhance this approach further, you can also save embeddings of the answers in the vector database. Instead of simply returning the cached answer, you can provide the past answer to the LLM as context, presenting it as an “ideal” answer. This allows for more nuanced responses and can improve the quality of the answers.

This is just the tip of the iceberg of how vector databases are being used. Other applications include zero-shot classification, audio search, anomaly detection, multi-modal text and image search, and recommendation systems!